Hyperparameters are adjustable settings in machine learning algorithms that control the model’s behavior during training. Unlike model parameters, which are learned from the data during training, hyperparameters are set before the training process begins. Examples of common hyperparameters include learning rate, regularization strength, and the number of hidden layers in a neural network.
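
To make the distinction concrete, the following toy sketch fits a one-parameter linear model with gradient descent. It is plain Python and not tied to any library; the `train` function and the data are made up for illustration. The learning rate and the number of epochs are hyperparameters chosen before training starts. The weight `w` is a model parameter learned from the data.

```python
def train(xs, ys, learning_rate=0.05, epochs=200):
    """Fit y ≈ w * x by gradient descent on mean squared error (toy example)."""
    w = 0.0  # model parameter: learned from the data during training
    n = len(xs)
    for _ in range(epochs):  # epochs and learning_rate are hyperparameters
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x

good = train(xs, ys, learning_rate=0.05, epochs=200)
bad = train(xs, ys, learning_rate=0.2, epochs=200)
print(round(good, 3))  # → 2.0
print(abs(bad) > 1e6)  # → True: the larger learning rate overshoots and diverges
```

With a learning rate of 0.05 the weight converges to the true value of 2. With 0.2, each update overshoots and the weight diverges. This small illustration shows why hyperparameter values need to be chosen with care.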

In this blog post, we will explore the concept of hyperparameter tuning and its role in MLOps. We will discuss why optimizing machine learning models matters for both performance and resource efficiency, and we will delve into best practices for hyperparameter tuning in MLOps.

Understanding Hyperparameters in Machine Learning

Hyperparameters play a crucial role in determining a machine learning model’s performance and its ability to generalize to new data. Choosing appropriate hyperparameter values can have a significant impact on the model’s accuracy, convergence speed, and overall training time. Therefore, understanding the role and characteristics of different hyperparameters is essential for achieving optimal results in machine learning projects.

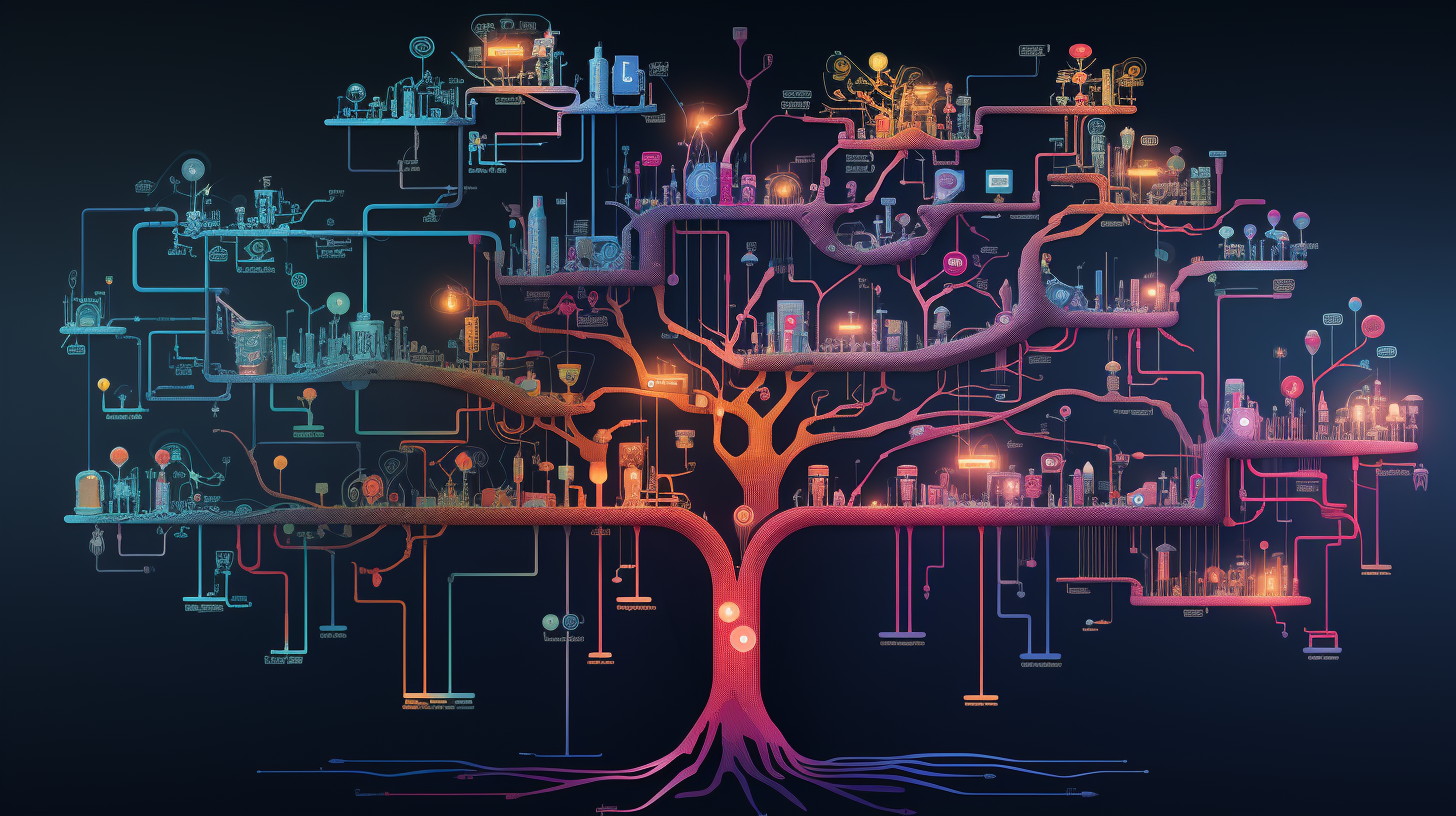

Moreover, developing a good intuition for hyperparameter choices can help practitioners build more efficient MLOps pipelines. Sensible starting values and well-chosen search ranges reduce the number of training runs spent on iterative model development, so teams can deploy high-performing models sooner.

Why Hyperparameter Tuning Matters in MLOps

Hyperparameter tuning is essential for achieving optimal model performance and generalization: by carefully selecting hyperparameter values, machine learning practitioners can develop models that perform well on unseen data. Tuning is also central to MLOps. When the tuning process is automated and its configurations and results are recorded, it becomes reproducible, scales across many experiments, and runs without manual intervention. The result is more efficient and reliable machine learning workflows.

In the context of MLOps, hyperparameter tuning ensures that machine learning models are fine-tuned for specific tasks and datasets, which contributes to better overall system performance. By automating and standardizing the tuning process, MLOps pipelines can help teams avoid manual errors, reduce human intervention, and save valuable time and resources.

Furthermore, when tuning is built into automated retraining pipelines, hyperparameters can be re-tuned as data patterns and business requirements change. As a result, MLOps pipelines that incorporate effective hyperparameter tuning strategies can keep models well suited to their tasks over time, which supports long-term success in real-world applications.

Hyperparameter Tuning Techniques

Several hyperparameter tuning methods are available, each with its own advantages and disadvantages. Some common techniques include:

- Grid search: A systematic, exhaustive search that evaluates every combination of candidate values from a predefined, discrete grid of hyperparameter values.

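- Random search: Hyperparameter values are sampled at random from specified ranges or distributions for a fixed number of trials. It is often more efficient than grid search, particularly when only a few of the hyperparameters strongly affect performance.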

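- Bayesian optimization: A probabilistic approach that builds a surrogate model of the objective function, commonly a Gaussian process, though alternatives such as tree-structured Parzen estimators are also used. It selects the next hyperparameter values by optimizing an acquisition function, such as expected improvement. The acquisition function balances exploring uncertain regions against exploiting promising ones.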
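
The first two strategies are simple enough to sketch in plain Python. In the example below, `validation_loss` is a hypothetical stand-in for training a model with the given hyperparameters and scoring it on validation data. The grid values and sampling ranges are illustrative assumptions. Both searches get the same budget of 16 evaluations.

```python
import itertools
import math
import random

def validation_loss(learning_rate, l2):
    # Hypothetical stand-in for "train, then score on validation data".
    # Its minimum is at learning_rate=1e-2, l2=1e-3.
    return (math.log10(learning_rate) + 2) ** 2 + (math.log10(l2) + 3) ** 2

def grid_search(grid):
    # Evaluate every combination of the candidate values in the grid.
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        loss = validation_loss(**params)
        if best is None or loss < best[1]:
            best = (params, loss)
    return best

def random_search(n_trials, seed=0):
    # Sample each hyperparameter independently and log-uniformly.
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        params = {
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "l2": 10 ** rng.uniform(-5, -1),
        }
        loss = validation_loss(**params)
        if best is None or loss < best[1]:
            best = (params, loss)
    return best

grid = {"learning_rate": [1e-4, 1e-3, 1e-2, 1e-1], "l2": [1e-5, 1e-4, 1e-3, 1e-2]}
print(grid_search(grid))   # evaluates 4 * 4 = 16 combinations
print(random_search(16))   # same budget: 16 random configurations
```

Grid search can only return one of the points it was given. Random search draws a fresh value for every hyperparameter on every trial, so for the same budget it explores more distinct values of each individual hyperparameter.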

The choice of tuning method depends on the complexity of the problem, the size of the search space, and the available computational resources. Grid search is exhaustive, and its cost grows multiplicatively with every hyperparameter added. It is therefore best suited to small search spaces where evaluating every combination is affordable. Random search scales better to large search spaces because its cost is set by the number of trials rather than the size of the grid. Bayesian optimization uses the results of earlier trials to focus on promising regions, which is especially valuable when each training run is expensive.
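
The cost argument can be made precise. Take a grid with $k_i$ candidate values for hyperparameter $i$, out of $n$ hyperparameters. The number of training runs is

$$
|G| = \prod_{i=1}^{n} k_i
$$

So 5 candidate values for each of 4 hyperparameters already means $5^4 = 625$ runs. Adding a fifth hyperparameter with 5 values multiplies that to $5^5 = 3125$. Random search with a budget of $T$ trials costs $T$ runs, however many hyperparameters are searched.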

In addition to these techniques, other advanced tuning methods, such as genetic algorithms and reinforcement learning, can also be employed in certain scenarios. These methods can adaptively search the hyperparameter space and offer promising results, especially when dealing with complex and high-dimensional problems. However, they may require more expertise and careful implementation to ensure success in MLOps workflows.
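
A genetic algorithm can be sketched in a few dozen lines. The minimal version below uses a hypothetical stand-in objective and illustrative bounds. Each generation, it keeps the best configurations (selection with elitism), combines pairs of them (uniform crossover), and perturbs the offspring in log space (mutation). The population size, number of generations, and mutation scale are themselves settings that need choosing, which is part of the extra care these methods require.

```python
import math
import random

# Hypothetical search bounds (low, high) for learning_rate and l2 strength.
BOUNDS = [(1e-4, 1e-1), (1e-5, 1e-1)]

def validation_loss(individual):
    # Stand-in for training and validating a model; minimum at lr=1e-2, l2=1e-3.
    lr, l2 = individual
    return (math.log10(lr) + 2) ** 2 + (math.log10(l2) + 3) ** 2

def clip(value, low, high):
    return min(max(value, low), high)

def random_individual(rng):
    # Sample each hyperparameter log-uniformly within its bounds.
    return [clip(10 ** rng.uniform(math.log10(lo), math.log10(hi)), lo, hi)
            for lo, hi in BOUNDS]

def crossover(a, b, rng):
    # Uniform crossover: each gene is copied from one parent at random.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]

def mutate(individual, rng, scale=0.3):
    # Multiply each gene by 10**N(0, scale), i.e. Gaussian noise in log space.
    return [clip(g * 10 ** rng.gauss(0, scale), lo, hi)
            for g, (lo, hi) in zip(individual, BOUNDS)]

def genetic_search(pop_size=20, generations=15, n_elite=5, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=validation_loss)  # lower loss = fitter
        elite = population[:n_elite]          # selection: elites survive unchanged
        children = []
        while len(children) < pop_size - n_elite:
            a, b = rng.sample(elite, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = elite + children
    best = min(population, key=validation_loss)
    return best, validation_loss(best)

best, loss = genetic_search()
print(best, loss)
```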

Best Practices for Hyperparameter Tuning in MLOps

To optimize hyperparameter tuning in MLOps, consider the following best practices:

- Use cross-validation: Employ cross-validation to evaluate each hyperparameter configuration. This reduces the risk of selecting values that only happen to perform well on one particular validation split, and it gives a more accurate estimate of the model’s generalization ability.

- Define appropriate search spaces: Set up search spaces that reflect realistic ranges and suitable scales for each hyperparameter, such as sampling the learning rate on a logarithmic scale. This enables efficient exploration and avoids extreme or unrealistic values.

- Leverage early stopping and parallelization: Implement early stopping to halt training when validation performance stops improving, saving time and resources. Use parallelization to run multiple tuning trials concurrently, further reducing the overall tuning time.
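
Early stopping can be expressed as a small loop around training. The sketch below is a minimal, library-agnostic version that assumes a *patience* rule. Training stops once the validation loss has failed to improve by more than `min_delta` for `patience` consecutive epochs. The `simulated_epochs` generator and the loss values are hypothetical stand-ins for a real training loop.

```python
def early_stopping(epoch_losses, patience=3, min_delta=0.0):
    """Consume per-epoch validation losses; stop after `patience` epochs without improvement.

    Returns (last_epoch_run, best_epoch, best_loss).
    """
    best_loss, best_epoch, epoch = float("inf"), -1, -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(epoch_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop early: remaining epochs are never trained
    return epoch, best_epoch, best_loss

trained = []

def simulated_epochs(losses):
    # Stand-in for "train one epoch, then evaluate on validation data".
    for epoch, loss in enumerate(losses):
        trained.append(epoch)
        yield loss

losses = [0.9, 0.7, 0.6, 0.58, 0.59, 0.60, 0.61, 0.62, 0.63]
print(early_stopping(simulated_epochs(losses), patience=3))  # → (6, 3, 0.58)
print(len(trained))  # → 7: epochs 7 and 8 were never run
```

Because the losses are consumed lazily, stopping at epoch 6 means the last two epochs are never trained at all. In a real setup, the weights from `best_epoch` would usually be the ones kept.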

Cross-validation is particularly important in hyperparameter tuning, as it allows for a more reliable estimation of the model’s performance on unseen data. In k-fold cross-validation, the dataset is split into k folds and the model is trained k times, each time validating on a different fold and training on the rest. Averaging the k validation scores reduces the chance that a configuration is chosen merely because it fits one particular split well, which gives a better picture of the model’s ability to generalize. These averaged scores can then guide the selection of hyperparameter values.
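
The following sketch uses k-fold cross-validation to pick one hyperparameter, the regularization strength λ (`lam`). The model is a toy one-dimensional ridge regression without an intercept. The model, data, and candidate values are assumptions made for illustration; in practice, a library implementation such as scikit-learn's cross-validation utilities would normally be used.

```python
import random

def k_fold_indices(n, k, seed=0):
    # Shuffle once, then deal the indices into k folds of near-equal size.
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_ridge_1d(xs, ys, lam):
    # Closed-form 1-D ridge regression without intercept: w = Σxy / (Σx² + λ)
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def cross_val_mse(xs, ys, lam, k=5):
    scores = []
    for val_idx in k_fold_indices(len(xs), k):
        val_set = set(val_idx)
        train_idx = [i for i in range(len(xs)) if i not in val_set]
        w = fit_ridge_1d([xs[i] for i in train_idx], [ys[i] for i in train_idx], lam)
        mse = sum((w * xs[i] - ys[i]) ** 2 for i in val_idx) / len(val_idx)
        scores.append(mse)
    return sum(scores) / len(scores)  # average validation MSE across the k folds

# Hypothetical data: y = 3x plus Gaussian noise.
rng = random.Random(1)
xs = [i / 10 for i in range(1, 21)]
ys = [3 * x + rng.gauss(0, 0.5) for x in xs]

candidates = [0.0, 0.1, 1.0, 10.0, 100.0]
scores = {lam: cross_val_mse(xs, ys, lam) for lam in candidates}
best_lam = min(scores, key=scores.get)
print(best_lam, scores[best_lam])
```

Every candidate is scored on the same folds because the shuffle is seeded. Differences in the averaged scores therefore reflect the hyperparameter rather than a different random split.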

Defining appropriate search spaces and leveraging early stopping and parallelization are also critical to efficient hyperparameter tuning. By carefully selecting the search space, practitioners can focus their computational resources on meaningful hyperparameter combinations. Early stopping and parallelization reduce the time and resources required for tuning, allowing teams to iterate more quickly and deploy optimized models faster.
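
Here is a sketch combining both ideas. It defines a search space with realistic but hypothetical ranges, with the learning rate sampled on a log scale. It then evaluates the sampled configurations concurrently with Python's `concurrent.futures`. The `evaluate` function is a made-up stand-in for training and validation.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical search space: (scale, low, high) for each hyperparameter.
SEARCH_SPACE = {
    "learning_rate": ("log", 1e-4, 1e-1),
    "dropout": ("linear", 0.0, 0.5),
    "num_layers": ("int", 1, 4),
}

def sample(space, rng):
    config = {}
    for name, (kind, low, high) in space.items():
        if kind == "log":
            config[name] = 10 ** rng.uniform(math.log10(low), math.log10(high))
        elif kind == "linear":
            config[name] = rng.uniform(low, high)
        else:  # "int": inclusive integer range
            config[name] = rng.randint(low, high)
    return config

def evaluate(config):
    # Stand-in for training and validation; returns a hypothetical loss.
    return ((math.log10(config["learning_rate"]) + 3) ** 2
            + (config["dropout"] - 0.2) ** 2
            + 0.1 * abs(config["num_layers"] - 2))

rng = random.Random(42)
configs = [sample(SEARCH_SPACE, rng) for _ in range(32)]  # sampled up front

# Evaluate trials concurrently; pool.map returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    losses = list(pool.map(evaluate, configs))

best_loss, best_config = min(zip(losses, configs), key=lambda pair: pair[0])
print(best_config, best_loss)
```

Configurations are sampled before any work is dispatched, so the set of trials is reproducible regardless of how the workers are scheduled. Threads suit workloads that release the GIL or wait on external resources, such as launching remote training jobs. For CPU-bound pure-Python training, `ProcessPoolExecutor` offers the same `map` interface, provided the evaluated function is defined at module level so it can be pickled.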

Tools and Frameworks for Hyperparameter Tuning in MLOps

Several popular libraries can facilitate hyperparameter tuning in MLOps, such as Optuna, Hyperopt, and Scikit-Optimize. Because they are called from ordinary Python training code, they can run as automated steps in MLOps pipelines and give teams a consistent, shareable tuning workflow.

These libraries and tools often come with built-in support for popular machine learning frameworks, such as TensorFlow, PyTorch, and Scikit-learn, making it easy for practitioners to integrate hyperparameter tuning into their existing workflows. They also provide advanced features, such as customizable search spaces, parallelization, and visualization capabilities, which can enhance the tuning process and enable more effective collaboration between team members.

By incorporating these tools into MLOps pipelines, machine learning teams can automate and standardize hyperparameter tuning, making the process more efficient and reproducible. This can lead to better overall model performance and faster deployment of optimized models in production settings.

Monitoring and Evaluating Hyperparameter Tuning Results

Keeping track of hyperparameter tuning experiments and visualizing results is crucial for gaining insights and iterating on the tuning process. Use monitoring and evaluation tools to assess the impact of hyperparameter choices on model performance and to identify trends or patterns that can inform future tuning efforts.

Logging and tracking hyperparameter tuning experiments enable machine learning practitioners to compare different tuning runs, identify the best-performing models, and understand how different hyperparameters influence model performance. Visualization tools, such as TensorBoard, MLflow, or custom dashboards, can provide graphical representations of the tuning results, making it easier to identify trends, spot outliers, and understand the relationships between hyperparameters and model performance.
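
Dedicated tools such as MLflow handle experiment tracking and add a UI on top. The core idea is simply to record each trial's hyperparameters and metrics in a durable, queryable form. The following stdlib-only sketch, with hypothetical helper names and file layout, appends one JSON line per trial and then picks the best run.

```python
import json
import tempfile
import time
from pathlib import Path

def log_trial(params, metrics, path):
    # Append one JSON record per trial so runs can be compared later.
    record = {"timestamp": time.time(), "params": params, "metrics": metrics}
    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")

def load_trials(path):
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]

def best_trial(trials, metric="val_loss"):
    # Lower is better for a loss metric.
    return min(trials, key=lambda t: t["metrics"][metric])

with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "tuning_runs.jsonl"  # hypothetical file name
    log_trial({"learning_rate": 0.1}, {"val_loss": 0.42}, log_path)
    log_trial({"learning_rate": 0.01}, {"val_loss": 0.31}, log_path)
    print(best_trial(load_trials(log_path))["params"])  # → {'learning_rate': 0.01}
```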

Monitoring and evaluation tools can also help teams detect potential issues, such as overfitting, underfitting, or convergence problems, early in the tuning process. By addressing these issues proactively, practitioners can refine their tuning strategies and make better-informed decisions, ultimately leading to more efficient and successful hyperparameter tuning efforts in MLOps.
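
Some of these problems can be flagged automatically from per-epoch loss curves. The sketch below is a set of simple heuristics, not a standard diagnostic. The thresholds `target_loss` and `gap_tol` are illustrative assumptions that would need to be set for a specific task and loss scale.

```python
import math

def diagnose(train_losses, val_losses, target_loss=0.1, gap_tol=0.1):
    """Heuristic checks on per-epoch loss curves; thresholds are illustrative."""
    if any(not math.isfinite(x) for x in train_losses):
        return ["divergence: non-finite training loss"]
    issues = []
    if train_losses[-1] > train_losses[0]:
        issues.append("convergence problem: training loss went up")
    if train_losses[-1] > target_loss and val_losses[-1] > target_loss:
        issues.append("possible underfitting: both losses above target")
    if val_losses[-1] - train_losses[-1] > gap_tol and val_losses[-1] > min(val_losses):
        issues.append("possible overfitting: large train/validation gap "
                      "and validation loss above its best value")
    return issues

print(diagnose([1.0, 0.5, 0.2, 0.05, 0.02], [1.0, 0.6, 0.4, 0.45, 0.5]))
print(diagnose([1.0, 0.9, 0.85, 0.84], [1.0, 0.92, 0.88, 0.87]))
```

The first curve pair is flagged as possible overfitting because training loss keeps falling while validation loss climbs away from its minimum. The second is flagged as possible underfitting because both losses stay well above the target.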

Conclusion

In summary, hyperparameter tuning plays a vital role in optimizing machine learning models and MLOps workflows. By understanding the different tuning techniques, implementing best practices, and leveraging available tools and frameworks, practitioners can improve model performance, generalization, and resource efficiency. Moreover, monitoring and evaluating tuning results can provide valuable insights and help teams iterate more effectively on their tuning strategies. We encourage you to explore and implement these best practices to elevate your machine learning models and MLOps processes.

