Introduction

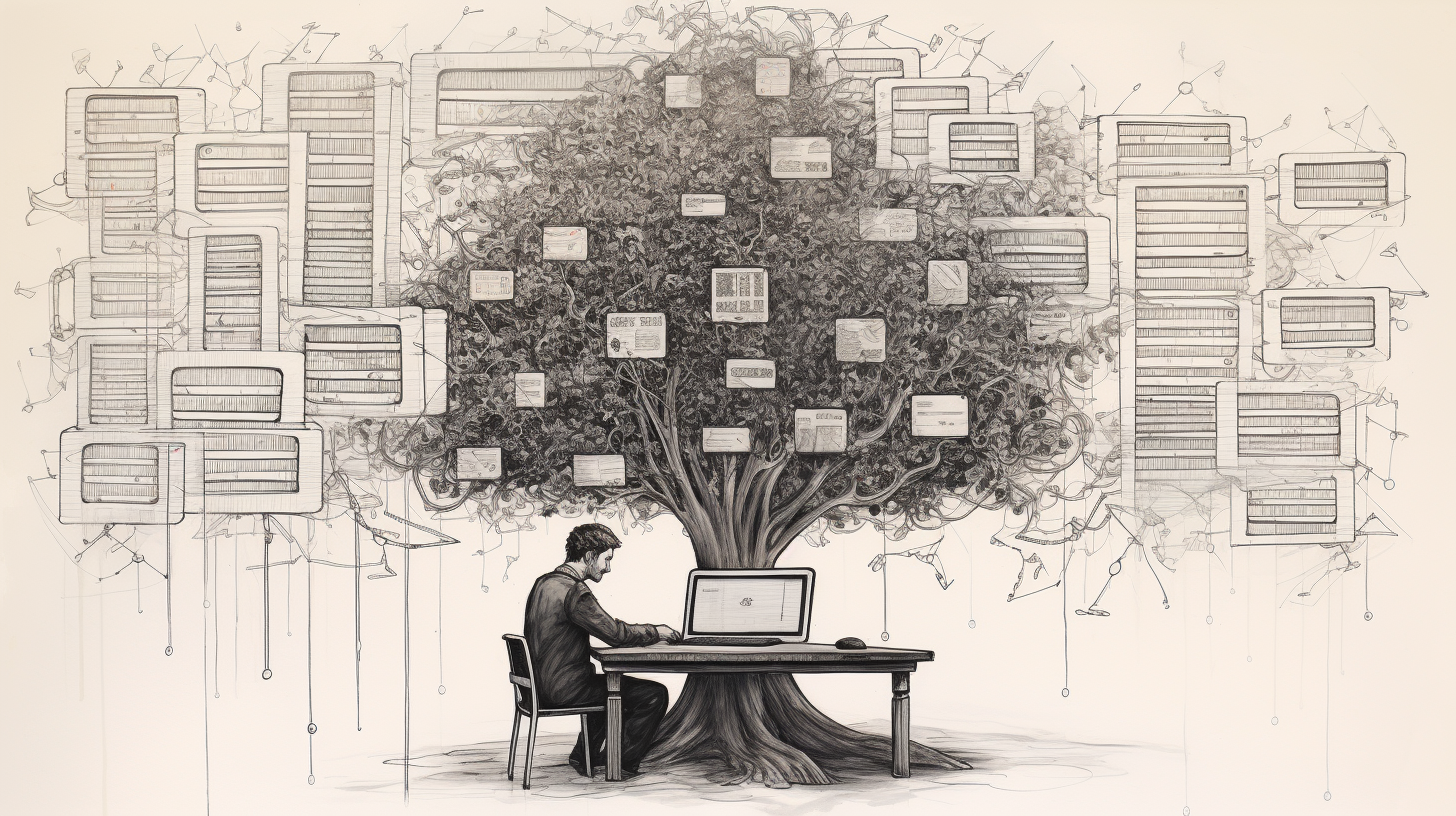

Data pipelines form the backbone of modern data analytics, transforming raw data into actionable insights. These pipelines are sequences of data processing stages, each tasked with a specific function, such as data collection, transformation, or analysis.

Careful design and implementation of a data pipeline largely determine how efficient, scalable, and reliable data processing will be. This guide covers the fundamental concepts, design strategies, and a hands-on example to help you understand and build efficient data pipelines.

Whether it’s small-scale applications or large enterprise systems, data pipelines are indispensable. By adhering to industry best practices and utilizing appropriate tools, one can construct robust pipelines that cater to specific business needs.

Data Pipeline Components

A typical data pipeline encompasses the following components:

Data Ingestion

The initial step in a data pipeline is data ingestion, which involves collecting data from various sources. This data could be streaming data, batch data, or a mix of both. Effective data ingestion processes ensure that data is accurately and reliably collected, and is available for processing. The importance of robust data ingestion cannot be overemphasized, as it sets the stage for the subsequent steps in the pipeline.
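
As a rough illustration of batch ingestion, the sketch below reads CSV rows and separates records that parse cleanly from those that do not. The column names (`user_id`, `amount`), the sample data, and the `ingest` helper are assumptions made for this example, not part of any particular tool.

```python
import csv
import io

# Hypothetical sample input; in practice this would come from a file, API, or queue.
RAW_CSV = """user_id,amount
1,10.50
2,not_a_number
3,7.25
"""

def ingest(source):
    """Read CSV rows and return (valid_records, rejected_rows)."""
    valid, rejected = [], []
    for row in csv.DictReader(source):
        try:
            valid.append({"user_id": int(row["user_id"]), "amount": float(row["amount"])})
        except (KeyError, TypeError, ValueError):
            # Keep bad rows for inspection rather than dropping them silently.
            rejected.append(row)
    return valid, rejected

records, rejected = ingest(io.StringIO(RAW_CSV))
print(len(records), "valid,", len(rejected), "rejected")
```

Keeping rejected rows, instead of discarding them, makes it easier to judge later whether ingestion is collecting data accurately.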

Data Transformation

Once data is ingested, it often requires cleaning and transformation to ensure consistency and quality. This step is crucial for eliminating any inaccuracies or inconsistencies in the data that could skew analysis. Data transformation processes may include normalization, aggregation, and other operations that prepare the data for analysis and storage.
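
To make normalization and aggregation concrete, here is a minimal sketch in plain Python. The record layout (`region`, `amount`) and both helper functions are hypothetical; min-max scaling is just one of several common normalization schemes.

```python
from collections import defaultdict

def min_max_normalize(values):
    """Rescale values to the range [0, 1]; constant input maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def total_by_key(records, key, field):
    """Sum `field` for each distinct value of `key`."""
    totals = defaultdict(float)
    for record in records:
        totals[record[key]] += record[field]
    return dict(totals)

# Hypothetical records for illustration only.
sales = [
    {"region": "north", "amount": 10.0},
    {"region": "south", "amount": 30.0},
    {"region": "north", "amount": 20.0},
]
normalized = min_max_normalize([r["amount"] for r in sales])
totals = total_by_key(sales, "region", "amount")
```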

Data Storage

After transformation, data needs to be stored in a suitable format and storage solution. The choice of storage system (a traditional database, a data warehouse, or a data lake) is dictated by the data’s structure, the required latency, and the type of analysis to be performed on the data. Effective data storage ensures that data is easily accessible and securely stored.
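
As one possible illustration of the "traditional database" option, the sketch below writes transformed records to SQLite using Python's standard library. The table name, columns, and sample rows are assumptions for this example; a warehouse or data lake would use different tooling.

```python
import sqlite3

# Hypothetical transformed records: (region, total).
rows = [("north", 30.0), ("south", 30.0)]

conn = sqlite3.connect(":memory:")  # use a file path instead to persist the data
conn.execute("CREATE TABLE region_totals (region TEXT PRIMARY KEY, total REAL)")
# Parameterized inserts handle quoting and avoid SQL injection.
conn.executemany("INSERT INTO region_totals (region, total) VALUES (?, ?)", rows)
conn.commit()

stored = conn.execute(
    "SELECT region, total FROM region_totals ORDER BY region"
).fetchall()
print(stored)
```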

Data Analysis

Once the data is stored, it can be analyzed to derive meaningful insights. This phase employs statistical and machine learning methods to interpret the data. The resulting insights can inform business decisions, drive operational improvements, or shape data-driven strategies.
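
A very small example of the statistical side of this phase, using only the standard library. The `daily_orders` figures are made up, and the two-standard-deviation threshold is an arbitrary choice for illustration rather than a recommended rule.

```python
import statistics

# Hypothetical daily order counts.
daily_orders = [120, 135, 128, 410, 131, 126, 133]

mean = statistics.mean(daily_orders)
median = statistics.median(daily_orders)
stdev = statistics.stdev(daily_orders)  # sample standard deviation

# Flag values more than two sample standard deviations from the mean.
outliers = [x for x in daily_orders if abs(x - mean) > 2 * stdev]
print(f"mean={mean}, median={median}, outliers={outliers}")
```

The gap between the mean and the median already hints that a single unusual day is skewing the average.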

Data Visualization

The final component of a data pipeline is data visualization, which entails presenting the data in a comprehensible and visually appealing format. By leveraging graphs, charts, and other visualization tools, stakeholders can easily interpret the data and make informed decisions.

Designing a Data Pipeline

Designing a data pipeline requires careful planning so that data flows reliably from the point of collection through to analysis. The main steps are as follows:

1. Understanding the Data Source

The first step in designing a data pipeline is understanding the data source. This involves identifying the types of data you’ll be working with, their origins, and the volume of data to be handled. Understanding the data source is critical as it helps in making informed decisions regarding data ingestion, transformation, and the necessary tools and technologies needed to manage the data efficiently throughout the pipeline.

2. Defining the Data Flow

Defining the data flow involves outlining how data will move through the pipeline. This includes specifying the steps of data ingestion, transformation, storage, analysis, and visualization, and determining how data will transition from one step to the next. A well-defined data flow ensures that data is processed efficiently and accurately, and helps in identifying any potential bottlenecks or points of failure in the pipeline.
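
One lightweight way to express a data flow is as an ordered list of stage functions, each consuming the previous stage's output. The sketch below assumes hypothetical `ingest`, `transform`, and `analyze` stages and a simple `name,value` line format.

```python
from functools import reduce

def ingest(lines):
    """Stage 1: strip whitespace and skip blank lines."""
    return [line.strip() for line in lines if line.strip()]

def transform(lines):
    """Stage 2: parse 'name,value' pairs into records."""
    records = []
    for line in lines:
        name, value = line.split(",")
        records.append({"name": name, "value": int(value)})
    return records

def analyze(records):
    """Stage 3: compute a small summary."""
    return {"count": len(records), "total": sum(r["value"] for r in records)}

STAGES = [ingest, transform, analyze]

def run_pipeline(data, stages=STAGES):
    """Pass the output of each stage to the next one, in order."""
    return reduce(lambda acc, stage: stage(acc), stages, data)

summary = run_pipeline(["a,1\n", "\n", "b,2\n"])
print(summary)
```

Because the flow is an explicit list, it is easy to see where a stage could be inserted, reordered, or measured when looking for bottlenecks.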

3. Selecting the Right Tools

Selecting the right tools is pivotal for the effective implementation and management of the data pipeline. This involves choosing technologies and platforms that are suited to the volume and nature of the data, as well as the processing requirements of the pipeline. The right tools can significantly impact the efficiency, scalability, and maintainability of the data pipeline.

4. Implementing Security Measures

Security is a paramount concern in data pipeline design. Implementing security measures involves ensuring that data is protected at all stages of the pipeline, from ingestion to visualization. This may include data encryption, access controls, and compliance with legal and organizational data protection policies. A secure data pipeline not only protects sensitive data but also builds trust with stakeholders and complies with regulatory requirements.
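
As a narrow illustration of protecting sensitive fields early in the pipeline, the sketch below replaces an identifier with a keyed hash (HMAC-SHA256) so later stages never see the raw value. The field name `email`, the `protect` helper, and the hard-coded key are assumptions for the example; a real key would come from a secrets manager, and pseudonymization complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac

# Assumption: in a real system the key is loaded from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value, key=SECRET_KEY):
    """Return a keyed SHA-256 digest of a string value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()

def protect(record, sensitive_fields=("email",)):
    """Copy a record, replacing sensitive fields with pseudonyms."""
    return {
        k: pseudonymize(v) if k in sensitive_fields else v
        for k, v in record.items()
    }

safe = protect({"email": "ada@example.com", "plan": "pro"})
print(safe["plan"], safe["email"][:12])
```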

5. Ensuring Scalability and Performance

Ensuring scalability and performance is crucial for the long-term viability of the data pipeline. Scalability ensures that the pipeline can handle increasing volumes of data and users as the need grows, while performance optimization ensures that data flows through the pipeline swiftly and reliably. This step involves monitoring, tuning, and potentially re-designing parts of the pipeline to meet evolving requirements and maintain a high level of performance as data volume and complexity increase.
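
Monitoring can start small. The sketch below records how long each stage takes using a decorator; the `timed` decorator, the `stage_timings` dictionary, and the `square_all` stage are hypothetical names for this example, and a production pipeline would more likely send such measurements to a metrics system.

```python
import time
from functools import wraps

stage_timings = {}  # stage name -> list of durations in seconds

def timed(stage):
    """Record the wall-clock duration of every call to `stage`."""
    @wraps(stage)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return stage(*args, **kwargs)
        finally:
            elapsed = time.perf_counter() - start
            stage_timings.setdefault(stage.__name__, []).append(elapsed)
    return wrapper

@timed
def square_all(values):
    return [v * v for v in values]

result = square_all(range(1000))
print({name: max(durations) for name, durations in stage_timings.items()})
```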

By paying heed to these considerations, one can design a data pipeline that is robust, efficient, and capable of delivering valuable insights from data in a reliable and scalable manner.

Example: Building a Simple Data Pipeline

This example puts the planning steps above into practice. It uses Apache Beam, a unified model for defining both batch and streaming data-parallel processing pipelines, to build a simple data pipeline.

Apache Beam offers SDKs for building and executing pipelines, as well as a variety of I/O connectors for reading from and writing to various data stores. This model alleviates the complexities of distributed processing, allowing developers to concentrate on their processing logic.

Here’s a step-by-step breakdown correlating to the planning steps:

- Identify the Data Source: (1. Understanding the Data Source)
  - Locate the input file input.txt from which data will be read.

- Define the Transformation: (2. Defining the Data Flow)
  - Outline the transformation logic in TransformFunction(), which will be applied to each record from the data source.

- Specify the Output: (3. Selecting the Right Tools)
  - Determine the location output.txt where the transformed data will be written.

In this manner, the high-level planning steps guide the specific actions required to construct the data pipeline, ensuring a coherent and well-structured process from planning to execution.

Now, let’s dive into the pseudocode of this pipeline:

- Import Apache Beam library

- Create a pipeline object

- Define the data processing steps:
  - Read data from input.txt
  - Apply the transformation function
  - Write the transformed data to output.txt

- Run the pipeline

And here’s how the code looks:

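import apache_beam as beam
from apache_beam.io import ReadFromText, WriteToText

# Placeholder transformation: the article does not define TransformFunction,
# so this stand-in simply strips whitespace and upper-cases each line.
class TransformFunction(beam.PTransform):
    def expand(self, pcoll):
        return pcoll | beam.Map(lambda line: line.strip().upper())

# Create a pipeline
pipeline = beam.Pipeline()

# Define the data processing steps (parentheses allow the chain to span lines)
(
    pipeline
    | 'Read Data' >> ReadFromText('input.txt')
    | 'Transform Data' >> TransformFunction()
    # WriteToText treats the name as a prefix, e.g. output.txt-00000-of-00001
    | 'Write Data' >> WriteToText('output.txt')
)

# Run the pipeline and wait for it to finish
pipeline.run().wait_until_finish()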

Implementation Challenges and Solutions

The implementation of data pipelines, although rewarding, can pose various challenges. Each challenge necessitates strategic solutions to ensure the smooth operation of the pipeline. Here’s a deeper look into these challenges and potential solutions:

Data Consistency

Ensuring data consistency across the pipeline is paramount for accurate analysis and decision-making. Challenges in data consistency may arise due to disparate data sources, data duplication, or data drift. Solutions may include implementing robust data validation and transformation processes, employing data versioning, or utilizing tools and frameworks that support data consistency and lineage tracking.
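
A minimal sketch of validation and deduplication, two of the techniques mentioned above. The required fields (`id`, `email`), the email check, and the "first record wins" rule are assumptions chosen for the example; real rules depend on the data.

```python
def validate(record, required=("id", "email")):
    """Return a list of problems found in a record; an empty list means valid."""
    problems = [f"missing {f}" for f in required if record.get(f) in (None, "")]
    if record.get("email") and "@" not in record["email"]:
        problems.append("malformed email")
    return problems

def deduplicate(records, key="id"):
    """Keep the first record seen for each key value."""
    seen, unique = set(), []
    for record in records:
        if record[key] not in seen:
            seen.add(record[key])
            unique.append(record)
    return unique

# Hypothetical incoming records.
incoming = [
    {"id": 1, "email": "a@example.com"},
    {"id": 1, "email": "a@example.com"},  # duplicate
    {"id": 2, "email": "broken"},         # malformed email
    {"id": 3, "email": ""},               # missing email
]
valid = [r for r in deduplicate(incoming) if not validate(r)]
print(valid)
```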

Data Security

Data security is a critical concern, especially when dealing with sensitive or personal information. Challenges may arise from unauthorized access, data breaches, or non-compliance with regulatory standards. Solutions include implementing stringent access control measures, encrypting data both at rest and in transit, and ensuring compliance with industry and legal standards like GDPR or HIPAA.

Scalability

As data volumes and processing needs grow, ensuring the scalability of the pipeline becomes vital. Challenges in scalability may result in performance bottlenecks or increased costs. Solutions entail designing the pipeline with scalability in mind from the outset, employing scalable technologies and architectures, and regularly monitoring and optimizing the pipeline’s performance as data processing demands evolve.
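
One common way to keep memory use flat as volumes grow is to process records in fixed-size batches instead of loading everything at once. The sketch below shows the idea with generators; the `batched` and `process_in_batches` helpers and the batch size are illustrative assumptions, and distributed frameworks apply the same principle across many machines.

```python
from itertools import islice

def batched(iterable, size):
    """Yield lists of at most `size` items without materializing the whole input."""
    iterator = iter(iterable)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch

def process_in_batches(records, size=1000):
    """Sum all records, holding only one batch in memory at a time."""
    total = 0
    for batch in batched(records, size):
        total += sum(batch)
    return total

total = process_in_batches(range(10_000), size=1000)
print(total)
```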

Maintainability

Maintainability is key for the long-term success of a data pipeline. Challenges here may arise from technical debt, code complexity, or inadequate monitoring and logging. Solutions encompass adopting best practices in code design and documentation, implementing comprehensive monitoring and logging systems, and ensuring a clear process for regularly reviewing and updating the pipeline as necessary.
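
As a small example of the logging practice described above, the sketch below wraps each stage so that its input size, output size, and any failure are recorded. The `run_stage` helper, the logger name, and the log format are assumptions for illustration.

```python
import logging

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
logger = logging.getLogger("pipeline")

def run_stage(name, func, data):
    """Run one stage, logging record counts and any failure."""
    logger.info("stage=%s started, records_in=%d", name, len(data))
    try:
        result = func(data)
    except Exception:
        # logger.exception includes the traceback, then the error is re-raised.
        logger.exception("stage=%s failed", name)
        raise
    logger.info("stage=%s finished, records_out=%d", name, len(result))
    return result

cleaned = run_stage("drop_empty", lambda rows: [r for r in rows if r], ["a", "", "b"])
```

Consistent `stage=` and `records_in`/`records_out` fields make the logs easy to search when a run needs to be investigated.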

Addressing these challenges head-on with well-thought-out solutions can significantly enhance the efficiency, reliability, and longevity of a data pipeline, thereby enabling organizations to derive greater value from their data assets over time.

Conclusion

Data pipelines are a cornerstone of contemporary data processing, enabling organizations to turn raw data into actionable insights. By understanding the key components, design principles, and implementation strategies, professionals can build robust and efficient data pipelines tailored to their requirements.

The example and concepts in this article are a starting point for anyone who wants to go deeper into data pipeline design and implementation. With a solid understanding and the right tools, building and maintaining data pipelines becomes far more manageable.
