Large Language Models (LLMs) have brought about a paradigm shift in natural language processing (NLP) and opened the door to new NLP applications. Despite their potential, developing and deploying LLMs poses significant challenges, and addressing them requires machine learning operations (MLOps) practices.

In essence, MLOps practices combine DevOps principles with machine learning workflows and models to ensure an efficient, reliable, and scalable development process. With LLMs, MLOps practices take on a crucial role in managing their complexity and ensuring their accuracy and reliability.

Size and Complexity Challenges

The size and complexity of LLMs pose significant challenges for both development and deployment.

LLMs can contain billions of parameters that require massive amounts of computational power for training and inference. To manage such complexity, MLOps practices like version control, continuous integration, and automated testing have become essential tools.

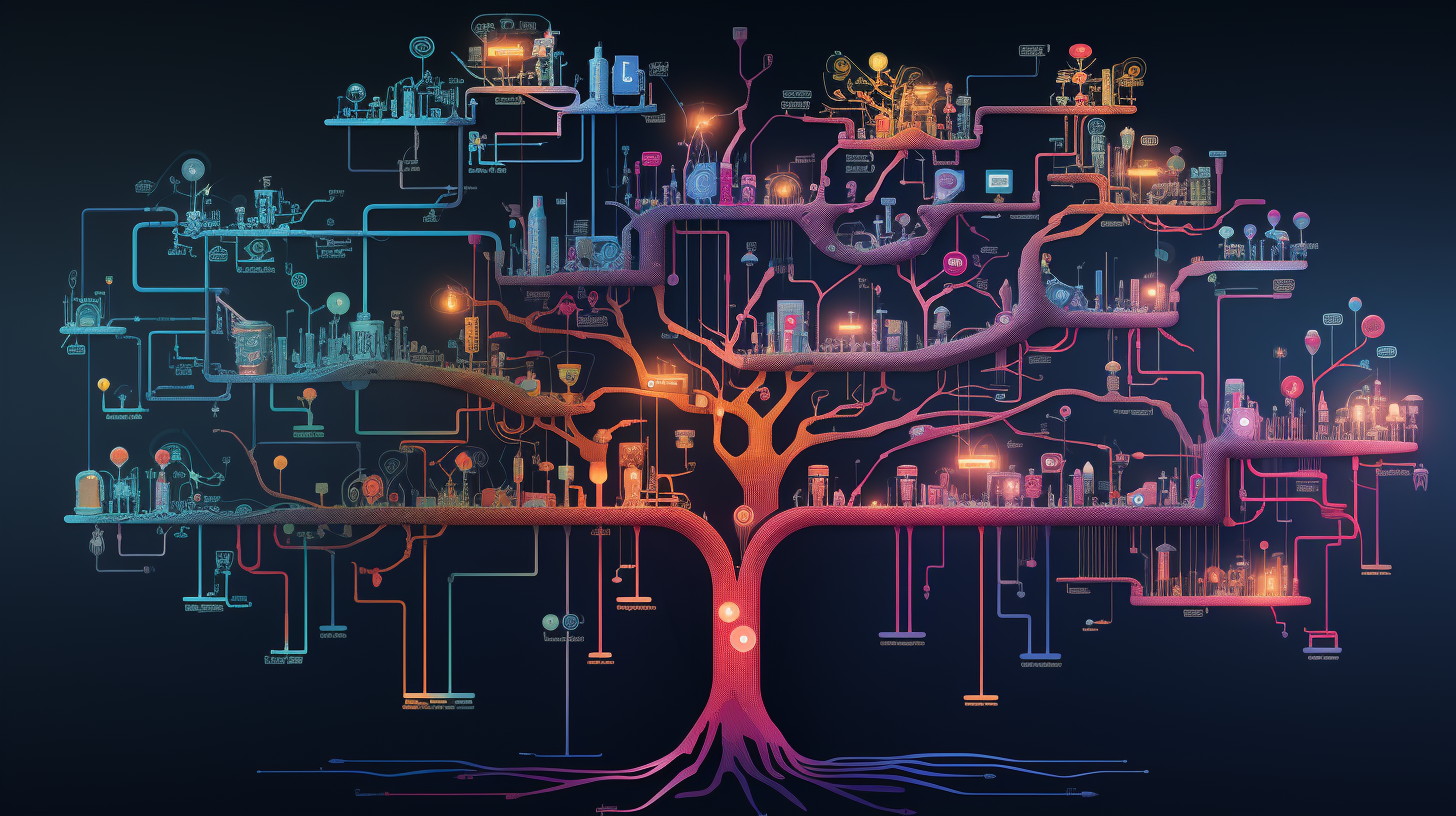

MLOps for LLMs

Version Control

- Allows for tracking changes and revisions

- Ensures that all contributors are working on the same version of the code

- Git is a popular tool for managing version control in LLM projects, enabling experiment reproducibility and the ability to roll back to a previous version if needed
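
Git tracks code, but reproducing an experiment also depends on knowing which settings produced a given result. One possible complement, sketched below, is to derive a short fingerprint from the experiment configuration and store it with each commit or run. The function name `config_fingerprint` and the hyperparameter values are hypothetical, chosen only for illustration.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Return a short, deterministic hash of an experiment configuration."""
    # sort_keys makes the serialization independent of dict insertion order.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical hyperparameters, for illustration only.
run_a = {"learning_rate": 3e-4, "batch_size": 32, "base_model": "example-model"}
run_b = {"batch_size": 32, "base_model": "example-model", "learning_rate": 3e-4}
run_c = {**run_a, "learning_rate": 1e-4}

# Same settings in a different key order yield the same fingerprint.
same_settings_match = config_fingerprint(run_a) == config_fingerprint(run_b)
# Changing one setting yields a different fingerprint.
changed_setting_differs = config_fingerprint(run_a) != config_fingerprint(run_c)
print(same_settings_match, changed_setting_differs)
```

Recording the fingerprint next to the Git commit hash gives two identifiers that together describe both the code and the settings behind a run.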

Continuous Integration (CI)

- Enables frequent automated testing of code changes, ensuring that new code is compatible with the existing codebase

- Integration testing is critical for identifying issues and bugs that arise when integrating code changes into the larger codebase

- Jenkins, CircleCI, and Travis CI are popular platforms used in LLM projects

- Automated testing also reduces the chances of errors and ensures the model’s reliability
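
As a rough illustration of the kind of check a CI platform could run on every commit, the sketch below uses Python's built-in `unittest` module. The `generate` function is a hypothetical stand-in for a real model call, and the specific checks (non-empty output, repeatable output, bounded length) are assumptions about what a team might want to verify, not a prescribed test suite.

```python
import unittest


def generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns a canned reply."""
    return f"Echo: {prompt.strip()}"


class GenerateSmokeTest(unittest.TestCase):
    def test_returns_non_empty_text(self):
        self.assertTrue(generate("Hello").strip())

    def test_is_repeatable_for_same_prompt(self):
        self.assertEqual(generate("Hello"), generate("Hello"))

    def test_output_length_is_bounded(self):
        # 200 characters is an arbitrary limit chosen for this example.
        self.assertLessEqual(len(generate("Hello")), 200)


def run_smoke_tests() -> bool:
    """Run the suite and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(GenerateSmokeTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()


all_passed = run_smoke_tests()
print(all_passed)
```

In a CI job, a failing test would typically fail the build, so an incompatible change is caught before it reaches the main branch.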

Monitoring & Alerting

To maintain an LLM’s performance over time, automated monitoring and alerting are necessary. For instance, new data may become available, or the model may be applied to new use cases, either of which can degrade performance. Automated monitoring and alerting detect such changes early, so teams can take proactive measures to maintain the model’s accuracy. Tools like TensorBoard and MLflow can be used for tracking and visualizing metrics in LLM projects.
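
The idea of detecting degradation early can be sketched without any particular tool. The example below keeps a sliding window of quality scores and raises an alert once the window average falls below a threshold. The class name `RollingMonitor`, the window size, the threshold, and the scores are all hypothetical values picked for illustration; a production setup would feed in real evaluation metrics and route alerts to an on-call channel.

```python
from collections import deque


class RollingMonitor:
    """Track a quality metric over a sliding window and flag drops."""

    def __init__(self, window_size: int, threshold: float):
        self.scores = deque(maxlen=window_size)
        self.threshold = threshold

    def record(self, score: float) -> bool:
        """Add a score; return True when the full window averages below threshold."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        return window_full and average < self.threshold


monitor = RollingMonitor(window_size=3, threshold=0.8)
scores = [0.9, 0.9, 0.9, 0.7, 0.6, 0.5]  # made-up scores that drift downward
alerts = [monitor.record(s) for s in scores]
print(alerts)  # [False, False, False, False, True, True]
```

Averaging over a window rather than alerting on a single low score is a design choice that trades a slightly slower reaction for fewer false alarms.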

Dealing with Bias

Data bias is a challenge that MLOps practices alone may not solve. Bias in the data can affect an LLM’s accuracy, and additional measures such as data augmentation, data balancing, and fairness testing may be necessary. Additionally, different types of LLMs, such as generative and discriminative models, may require different MLOps practices to deal with their unique challenges.
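
One simple form of fairness testing is to compare a metric across groups in the evaluation data. The sketch below computes accuracy per group and the largest gap between groups. The group names, predictions, and labels are made up for illustration, and accuracy gap is only one of several possible fairness measures; which one is appropriate depends on the application.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, prediction, label) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, prediction, label in records:
        total[group] += 1
        correct[group] += int(prediction == label)
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(per_group):
    """Return the difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Made-up evaluation records: (group, prediction, label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

per_group = accuracy_by_group(records)
gap = max_accuracy_gap(per_group)
print(per_group)  # {'group_a': 0.75, 'group_b': 0.25}
print(gap)        # 0.5
```

A check like this could run alongside other automated tests, failing when the gap exceeds a limit the team has agreed on.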

Continuous Deployment (CD) and Containerization

Finally, MLOps practices such as continuous deployment and containerization can simplify LLM deployment in production environments. Containerization packages a model together with its code and dependencies, so it can be deployed as a single unit. This reduces the risk of errors caused by differences between deployment environments. Kubernetes is a popular platform for container orchestration in LLM projects.

Summary

MLOps practices play a critical role in developing and deploying LLMs. Version control, continuous integration, and automated testing enable efficient and reliable development of these models, while automated monitoring and deployment simplify their maintenance and release. However, MLOps practices alone may not address all LLM challenges, and additional measures may be necessary to mitigate the impact of data bias and address the unique challenges posed by different types of LLMs. As LLMs continue to transform NLP applications, MLOps practices will become increasingly critical in enabling more efficient and effective collaboration across teams and organizations. That collaboration, in turn, supports the development of more transparent and trustworthy LLMs that can be audited and validated by stakeholders and regulators.
