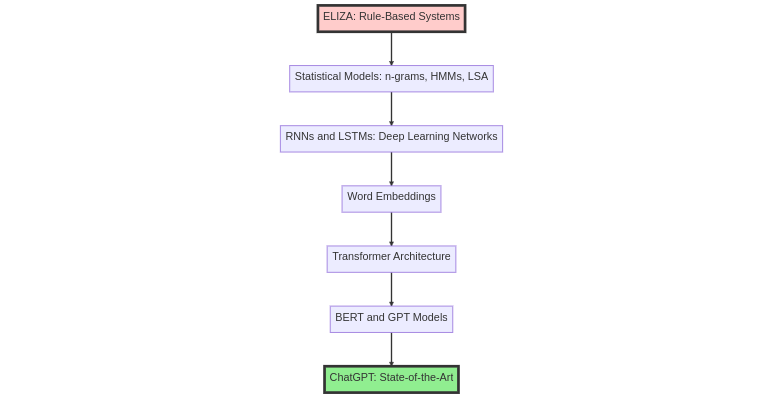

Chatbot development has progressed remarkably within natural language processing (NLP), from early rule-based systems such as ELIZA to deep learning models such as ChatGPT. In this article, we explore this evolution and highlight the key advances that have propelled chatbot technology forward.

Rule-Based Systems: The Beginning

The history of chatbots began in the 1960s with ELIZA, a rule-based program developed by Joseph Weizenbaum at MIT. ELIZA simulated conversation through pattern matching and substitution: it looked for keywords in the user's input and built a response from a set of predefined rules. Its best-known script, DOCTOR, mimicked a Rogerian psychotherapist, reflecting the user's statements back as non-directive questions.
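ELIZA's pattern-matching-and-substitution approach can be sketched in a few lines of Python. This is a minimal illustration, not Weizenbaum's implementation: the three rules, the `REFLECTIONS` table, and the `respond` function are hypothetical, and the real DOCTOR script used ranked keywords with decomposition and reassembly rules.

```python
import re

# Hypothetical DOCTOR-style rules: (pattern, response template).
RULES = [
    (re.compile(r"\bi need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."

# Swap first- and second-person words so the user's phrase can be echoed back.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "myself": "yourself",
}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(user_input: str) -> str:
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Substitute the reflected captured text into the template.
            return template.format(*(reflect(g) for g in match.groups()))
    return DEFAULT_REPLY  # no rule matched: fall back to a non-directive prompt


print(respond("I am worried about my exams."))
# → How long have you been worried about your exams?
```

The reply is assembled purely from the matched text, so the program has no notion of what "exams" means; it only rearranges the user's own words.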

While ELIZA demonstrated the potential of chatbots, its limitations were evident. The rule-based approach lacked understanding of the context and semantics of user input, resulting in responses that were often superficial and repetitive. Moreover, the system required extensive manual labor to create and maintain the rules, which hindered scalability.

Statistical Models: The Emergence of Machine Learning

The limitations of hand-written rules helped drive a shift toward statistical models in the late 20th century. Instead of following predefined rules, these models learned from large corpora of text, which allowed chatbots to produce more contextually relevant responses. Common approaches included n-gram models, which estimate the probability of a word from the words that immediately precede it; Hidden Markov Models (HMMs), which model a sequence of hidden states, such as part-of-speech tags, that produce the observed words; and Latent Semantic Analysis (LSA), which uncovers latent topics from patterns of word co-occurrence across documents.
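The n-gram idea can be made concrete with a bigram model (n = 2), which estimates P(word | previous word) by counting word pairs. The sketch below is a minimal maximum-likelihood version on a tiny made-up corpus; `train_bigram` and `bigram_prob` are illustrative names, and practical systems add smoothing so that unseen word pairs do not receive zero probability.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # <s> and </s> mark sentence boundaries.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, curr in zip(tokens, tokens[1:]):
            counts[prev][curr] += 1
    return counts


def bigram_prob(counts: dict[str, Counter], prev: str, curr: str) -> float:
    """Maximum-likelihood estimate: P(curr | prev) = count(prev, curr) / count(prev, *)."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return followers[curr] / total if total else 0.0


# Tiny made-up corpus for illustration.
corpus = ["how are you", "how are things", "how is work"]
model = train_bigram(corpus)
print(round(bigram_prob(model, "how", "are"), 3))  # → 0.667
```

Because the model only ever looks one word back, nothing earlier in the sentence can influence the estimate.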

While statistical models improved the quality of chatbot responses, they still struggled with complex language structure and with long-range dependencies between words; an n-gram model, for example, conditions only on a fixed window of preceding words. Additionally, their reliance on handcrafted features limited their ability to generalize to new scenarios.

Deep Learning Revolution: The Advent of Neural Networks

The rise of deep learning marked a significant leap in chatbot development. Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks, whose foundations date to the 1980s and 1990s, were widely adopted for language tasks in the 2010s as an alternative to statistical models. An RNN processes text one token at a time and maintains a hidden state that carries information from previous time steps. Plain RNNs struggle to retain information over long sequences because their gradients tend to vanish during training; LSTMs, a specific type of RNN, add gates that control what is stored, updated, and forgotten, which lets them learn much longer-term dependencies. Because these networks learn patterns directly from data rather than relying on handcrafted features, they produced more coherent and contextually appropriate responses.
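The hidden-state recurrence can be sketched with NumPy as a vanilla (Elman) RNN, assuming the standard update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b). The weights here are random rather than trained, and an LSTM would wrap input, forget, and output gates around this update; the sketch only shows how information from earlier steps flows into later states.

```python
import numpy as np


def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla (Elman) RNN over a sequence, returning every hidden state.

    inputs: (seq_len, input_dim)     one vector per token
    W_xh:   (hidden_dim, input_dim)  input-to-hidden weights
    W_hh:   (hidden_dim, hidden_dim) hidden-to-hidden (recurrent) weights
    b_h:    (hidden_dim,)            bias
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state,
        # which is how information from earlier time steps is carried forward.
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)


rng = np.random.default_rng(0)
seq_len, input_dim, hidden_dim = 5, 3, 4
inputs = rng.normal(size=(seq_len, input_dim))  # e.g. five 3-d token vectors
W_xh = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(states.shape)  # → (5, 4)
```

Changing the first input changes the final hidden state, which is the mechanism that lets an RNN carry context forward; in an untrained or plain RNN that influence fades quickly, which is the problem LSTM gating addresses.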

The introduction of word embeddings, such as word2vec and GloVe, further enhanced neural network-based chatbots. These dense vector representations place words with related meanings close together in vector space, allowing models to capture the meaning and context of user input more effectively.
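How close two word vectors are is usually measured with cosine similarity. The vectors below are hand-picked 3-dimensional toy values chosen for illustration, not learned embeddings; real ones, such as those produced by word2vec or GloVe, are learned from large corpora and typically have hundreds of dimensions.

```python
import math

# Hand-picked toy vectors (NOT learned embeddings), for illustration only.
EMBEDDINGS = {
    "king": [0.80, 0.65, 0.10],
    "queen": [0.75, 0.70, 0.15],
    "apple": [0.10, 0.20, 0.90],
}


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between u and v: 1.0 means the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


king_queen = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"])
king_apple = cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"])
print(king_queen > king_apple)  # → True
```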

The Transformer Era: BERT and Beyond

The transformer architecture, introduced by Vaswani et al. in 2017, revolutionized NLP and chatbot development. Transformers use self-attention to process all positions of an input sequence in parallel rather than one step at a time. This enabled much faster training and improved the handling of long-range dependencies, because any two positions in a sequence are connected directly instead of through a long chain of recurrent steps.
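Self-attention can be sketched as scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, the formulation from Vaswani et al. This minimal single-head NumPy version assumes random projection matrices in place of learned ones and omits multi-head attention, positional encodings, and masking. Every position's output comes from a few matrix products over the whole sequence, with no step-by-step loop as in an RNN.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over X of shape (seq_len, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    # (seq_len, seq_len): how strongly each token attends to every other token.
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_k = 6, 8, 4
X = rng.normal(size=(seq_len, d_model))  # stand-in for 6 embedded tokens
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)  # → (6, 4) (6, 6)
```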

One of the most influential transformer-based models is BERT (Bidirectional Encoder Representations from Transformers), released by Google in 2018, which set new state-of-the-art results on a wide range of NLP tasks. BERT is pre-trained to predict masked-out words using context from both the left and the right, which lets it learn deep contextual representations and significantly improves its grasp of language semantics. As an encoder, however, BERT is designed for understanding tasks rather than for generating text. The generative lineage that leads to chatbots like ChatGPT runs through OpenAI's GPT and GPT-2, which are trained to predict the next word from left to right.
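One way to see what "bidirectional" means is through the attention mask. In BERT's encoder every token may attend to tokens on both sides, while GPT-style decoders apply a causal mask so each token sees only itself and earlier tokens. The NumPy sketch below builds both masks and applies them to uniform attention scores; `attention_mask` and `masked_softmax` are illustrative helpers, and BERT's pre-training objective (predicting masked-out words) is not shown.

```python
import numpy as np


def attention_mask(seq_len: int, causal: bool) -> np.ndarray:
    """Boolean mask where mask[i, j] is True if token i may attend to token j."""
    full = np.ones((seq_len, seq_len), dtype=bool)
    # Causal (GPT-style): keep only j <= i, i.e. the lower triangle.
    return np.tril(full) if causal else full


def masked_softmax(scores: np.ndarray, mask: np.ndarray) -> np.ndarray:
    # Disallowed positions get -inf so they receive zero weight after softmax.
    masked = np.where(mask, scores, -np.inf)
    e = np.exp(masked - masked.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


tokens = ["the", "cat", "sat", "down"]
n = len(tokens)
scores = np.zeros((n, n))  # uniform scores, to isolate the effect of the mask

bidirectional = masked_softmax(scores, attention_mask(n, causal=False))
causal = masked_softmax(scores, attention_mask(n, causal=True))
print(bidirectional[1])  # "cat" attends to all four tokens equally
print(causal[1])         # "cat" attends only to "the" and itself
```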

ChatGPT: The State of the Art in Chatbot Development

Building on the successes of transformer-based models, ChatGPT represents the state of the art in chatbot development at the time of writing. Its name refers to GPT (Generative Pre-trained Transformer), the family of OpenAI models it is built on. ChatGPT is first pre-trained on a large and diverse body of text with a self-supervised objective, predicting the next token, and then fine-tuned for dialogue using human-written demonstrations and reinforcement learning from human feedback (RLHF). The result is a model that generates coherent, contextually relevant, and human-like responses.

ChatGPT generates text one token at a time, conditioning each new token on the conversation so far, while decoding settings such as the sampling temperature control how varied the output is. This allows it to produce diverse responses while maintaining a high degree of relevance and coherence. Examples of ChatGPT's capabilities include providing detailed answers to user queries, generating creative content, and engaging in multi-turn conversations with users.
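Conditioning on context and controlling output with decoding settings can be sketched as an autoregressive sampling loop with a temperature parameter. This is an illustration under stated assumptions, not ChatGPT's implementation: `toy_logits` is a hypothetical stand-in for a trained network, and temperature is shown as one common decoding control alongside others such as top-p sampling.

```python
import math
import random
from collections.abc import Callable


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def generate(context: list[str], next_token_logits: Callable[[list[str]], list[float]],
             vocab: list[str], max_new_tokens: int,
             temperature: float = 1.0, seed: int = 0) -> list[str]:
    """Autoregressive loop: each new token is conditioned on everything so far."""
    rng = random.Random(seed)
    tokens = list(context)
    for _ in range(max_new_tokens):
        probs = softmax_with_temperature(next_token_logits(tokens), temperature)
        token = rng.choices(vocab, weights=probs, k=1)[0]
        if token == "<eos>":  # end-of-sequence token stops generation
            break
        tokens.append(token)
    return tokens


VOCAB = ["hello", "there", "friend", "<eos>"]


def toy_logits(tokens: list[str]) -> list[float]:
    # Hypothetical stand-in for a trained model: strongly favours the word
    # that follows the last token in the phrase "hello there friend".
    follow = {"hello": "there", "there": "friend", "friend": "<eos>"}
    target = follow.get(tokens[-1], "hello")
    return [5.0 if w == target else 0.0 for w in VOCAB]


print(generate(["hello"], toy_logits, VOCAB, max_new_tokens=5, temperature=0.1))
# → ['hello', 'there', 'friend']
```

Lower temperatures concentrate probability on the most likely token; higher temperatures flatten the distribution, trading predictability for variety.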

Conclusion

The progression of chatbot development from ELIZA to ChatGPT reflects the remarkable advancements in NLP and machine learning over nearly 60 years. From rule-based systems to deep learning and transformer architectures, each stage has contributed to a deeper understanding of language and the development of more sophisticated chatbot technologies.

As the field continues to evolve, we can anticipate further breakthroughs that will push the boundaries of human-machine interaction and revolutionize the way we communicate with technology. However, challenges remain, such as addressing biases present in training data, ensuring the ethical use of chatbots, and improving the ability of chatbots to understand and generate more nuanced responses. These areas for future research will undoubtedly shape the next wave of advancements in chatbot development.
