Introduction

Database normalization is the process of organizing data in a database to reduce data redundancy and improve data integrity. This practical guide covers the basics of normalization, including the first three normal forms (1NF, 2NF, and 3NF), and walks through an example of an unnormalized table and its normalized version. It also explains how normalization helps eliminate modification anomalies, and when deliberate denormalization can be worth its costs. Finally, it looks at supporting tools, common mistakes, and how normalization applies to modern systems such as NoSQL databases.

Database normalization is an important concept for database and application developers to understand. Normalization helps organize data efficiently, eliminate redundancy, and protect data integrity. Following proper normalization techniques makes databases, and the applications built on them, more robust and easier to maintain. The idea dates back to the early 1970s, when Edgar F. Codd defined the first normal form as part of the relational model. It has since been extended and adapted to the data demands of modern applications.

Goals and Benefits of Normalization

The primary goals of normalization are to:

- Eliminate redundant data

- Ensure data dependencies make sense

- Protect the database against anomalies

- Improve data integrity

- Simplify updates, since each fact is stored in only one place

Some key benefits include:

- More efficient data storage and reduced disk space usage

- Improved data integrity and consistency

- Simpler, less redundant data models

- Fewer anomalies during modification

- Easier addition, modification, and deletion of data

- Simpler update statements, though read queries may need more joins

Normal Forms and Normalization Levels

Normalization organizes data through progressive levels called normal forms. Each level builds on the previous one and adds stricter structural constraints.

First Normal Form (1NF)

- Atomic values – no repeating groups

- Separate tables for unrelated data

- Primary key defined for each table
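The atomic-value rule is easiest to see on a repeating group. The minimal Python sketch below assumes a hypothetical raw record whose `authors` cell holds a comma-separated list. It flattens that record into one row per author so that every cell holds a single value. The helper name `to_1nf` and the extra book "Data Modeling" are illustrative only.

```python
# Hypothetical unnormalized rows: the "authors" cell holds a repeating group
# (a comma-separated list), which breaks 1NF's atomic-value rule.
raw_books = [
    {"book_id": 1, "title": "SQL Basics", "authors": "John Doe"},
    {"book_id": 4, "title": "Data Modeling", "authors": "John Doe, Jane Smith"},
]


def to_1nf(rows):
    """Return one (book_id, title, author) tuple per author, so every cell is atomic."""
    flat = []
    for row in rows:
        for author in row["authors"].split(","):
            flat.append((row["book_id"], row["title"], author.strip()))
    return flat


flat_rows = to_1nf(raw_books)
for record in flat_rows:
    print(record)
# (1, 'SQL Basics', 'John Doe')
# (4, 'Data Modeling', 'John Doe')
# (4, 'Data Modeling', 'Jane Smith')
```

After flattening, `book_id` alone no longer identifies a row. The key for this shape becomes the pair (book_id, author), which is the kind of composite key that 2NF then has to deal with.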

Second Normal Form (2NF)

- Meets all 1NF requirements

- No partial dependencies – all non-key attributes fully dependent on the entire primary key (especially relevant when the primary key is composite)

Third Normal Form (3NF)

- Meets all 2NF requirements

- No transitive dependencies – every non-key attribute depends directly on the primary key, not on another non-key attribute

Higher normal forms also exist. Boyce-Codd Normal Form (BCNF) is a stricter version of 3NF that handles certain cases involving overlapping candidate keys, and 4NF and 5NF go further still. In practice, however, many databases are normalized only to 3NF.

Anomalies Explained

Anomalies are inconsistencies that can arise when data is modified. An insertion anomaly occurs when one fact cannot be recorded without another; for example, an author cannot be added until they have a book. A deletion anomaly occurs when deleting one fact unintentionally removes another, valuable one. An update anomaly occurs when a repeated value is changed in some rows but not others, leaving the data contradicting itself. Normalization helps eliminate these pitfalls.
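To make the update and deletion anomalies concrete, here is a small sketch using Python's built-in `sqlite3` module and an in-memory database. It loads the same book and author rows used in the example later in this guide into a single wide table. The table name `books_flat` and the corrected birthplace "Denver" are hypothetical values chosen for illustration.

```python
import sqlite3

# In-memory copy of the unnormalized book/author table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE books_flat (book_id INTEGER PRIMARY KEY, title TEXT, "
    "author_name TEXT, author_birthplace TEXT)"
)
conn.executemany(
    "INSERT INTO books_flat VALUES (?, ?, ?, ?)",
    [
        (1, "SQL Basics", "John Doe", "Boston"),
        (2, "DB Design", "Jane Smith", "Chicago"),
        (3, "SQL Queries", "John Doe", "Boston"),
    ],
)

# Update anomaly: the birthplace is changed on only one of John Doe's rows.
conn.execute("UPDATE books_flat SET author_birthplace = 'Denver' WHERE book_id = 1")
birthplaces = conn.execute(
    "SELECT DISTINCT author_birthplace FROM books_flat "
    "WHERE author_name = 'John Doe' ORDER BY author_birthplace"
).fetchall()
print(birthplaces)  # [('Boston',), ('Denver',)]

# Deletion anomaly: removing Jane Smith's only book also erases her birthplace.
conn.execute("DELETE FROM books_flat WHERE book_id = 2")
remaining = conn.execute(
    "SELECT COUNT(*) FROM books_flat WHERE author_name = 'Jane Smith'"
).fetchone()[0]
print(remaining)  # 0
```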

Examples of Normalization

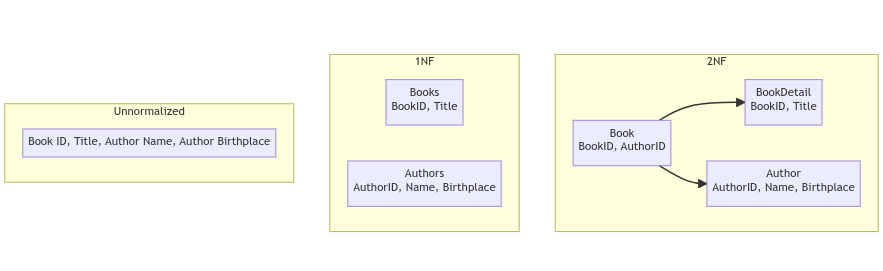

Let’s walk through an example that illustrates how normalization transforms an unstructured design into a well-structured normalized database.

Unnormalized Form

Here is an unnormalized table storing book and author data:

| Book ID | Title | Author Name | Author Birthplace |
|---|---|---|---|
| 1 | SQL Basics | John Doe | Boston |
| 2 | DB Design | Jane Smith | Chicago |
| 3 | SQL Queries | John Doe | Boston |

This unnormalized table contains redundant data: John Doe’s name and birthplace are repeated for each of his books. It also mixes two entity types, books and authors, in one table. As a result, Author Birthplace depends on the author rather than on the book.

First Normal Form (1NF)

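Every cell already holds a single atomic value, but the table mixes unrelated data. Following the 1NF guidelines above, we’ll split it into two tables, Books and Authors. Each table has its own primary key and contains only related data. Books keeps an AuthorID foreign key so that each book still points to its author and no information is lost.

Books

| BookID | Title | AuthorID |
|---|---|---|
| 1 | SQL Basics | 1 |
| 2 | DB Design | 2 |
| 3 | SQL Queries | 1 |

Authors

| AuthorID | Name | Birthplace |
|---|---|---|
| 1 | John Doe | Boston |
| 2 | Jane Smith | Chicago |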
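One way to express this split in SQL is sketched below with Python's `sqlite3` module. It assumes that Books carries an `AuthorID` foreign key, which is the column that links each book back to its author. A join then reproduces the original wide table, which shows that the decomposition loses no information. The exact column types are an assumption made for the sketch.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.executescript(
    """
    CREATE TABLE Authors (
        AuthorID   INTEGER PRIMARY KEY,
        Name       TEXT NOT NULL,
        Birthplace TEXT
    );
    CREATE TABLE Books (
        BookID   INTEGER PRIMARY KEY,
        Title    TEXT NOT NULL,
        AuthorID INTEGER NOT NULL REFERENCES Authors(AuthorID)
    );
    """
)
conn.executemany("INSERT INTO Authors VALUES (?, ?, ?)",
                 [(1, "John Doe", "Boston"), (2, "Jane Smith", "Chicago")])
conn.executemany("INSERT INTO Books VALUES (?, ?, ?)",
                 [(1, "SQL Basics", 1), (2, "DB Design", 2), (3, "SQL Queries", 1)])

# Joining on the foreign key rebuilds the original unnormalized view.
rows = conn.execute(
    """
    SELECT b.BookID, b.Title, a.Name, a.Birthplace
    FROM Books AS b JOIN Authors AS a ON a.AuthorID = b.AuthorID
    ORDER BY b.BookID
    """
).fetchall()
for row in rows:
    print(row)
```

John Doe’s birthplace is now stored in exactly one row, so correcting it is a single-row update.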

Second Normal Form (2NF)

Both tables have single-column primary keys. Partial dependencies therefore cannot occur, and the design already satisfies 2NF. 2NF matters when a table has a composite primary key, because every non-key attribute must then depend on the entire key, not on just part of it.
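For illustration, consider a hypothetical BookAuthors table keyed on (book_id, author_id). It records each author’s role on each book, and it also stores the author’s name. The Python sketch below checks, for each non-key attribute, whether some part of the key alone determines it. The helper `fd_holds` and all table data here are assumptions made for the example. Note also that a dependency observed in sample rows only suggests a rule; the real dependencies come from what the data means.

```python
def fd_holds(rows, lhs, rhs):
    """True if, in these rows, equal values on `lhs` always come with equal values on `rhs`."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        val = tuple(row[c] for c in rhs)
        if seen.setdefault(key, val) != val:
            return False
    return True


# Hypothetical table with composite primary key (book_id, author_id).
book_authors = [
    {"book_id": 1, "author_id": 1, "role": "Lead author", "author_name": "John Doe"},
    {"book_id": 4, "author_id": 1, "role": "Co-author", "author_name": "John Doe"},
    {"book_id": 4, "author_id": 2, "role": "Lead author", "author_name": "Jane Smith"},
]

key = ("book_id", "author_id")
# For each non-key attribute, list the parts of the key that determine it on their own.
partial_deps = {
    attr: [part for part in key if fd_holds(book_authors, (part,), (attr,))]
    for attr in ("role", "author_name")
}
print(partial_deps)  # {'role': [], 'author_name': ['author_id']}
```

`role` needs the whole key, but `author_name` depends on `author_id` alone. That is a partial dependency, and the 2NF fix is to move `author_name` into an Authors table keyed on `author_id`.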

Third Normal Form (3NF)

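In the original table, Author Birthplace depended on Author Name, which in turn depended on Book ID. That chain is a transitive dependency. After the split, Birthplace depends directly on AuthorID, the primary key of Authors. No table now has a transitive dependency, so the design satisfies 3NF. Because each author’s details are stored once, the redundancy and the insertion, update, and deletion anomalies described earlier are gone for this data.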
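The transitive dependency in the original table can also be checked mechanically. The sketch below reuses the same idea as a simple functional-dependency test, redefined here as the hypothetical helper `fd_holds` so that the block is self-contained. It confirms that Book ID determines Author Name and that Author Name determines Birthplace, while Author Name is not itself a key.

```python
def fd_holds(rows, lhs, rhs):
    """True if, in these rows, equal values on `lhs` always come with equal values on `rhs`."""
    seen = {}
    for row in rows:
        key = tuple(row[c] for c in lhs)
        val = tuple(row[c] for c in rhs)
        if seen.setdefault(key, val) != val:
            return False
    return True


original = [
    {"book_id": 1, "title": "SQL Basics", "author_name": "John Doe", "birthplace": "Boston"},
    {"book_id": 2, "title": "DB Design", "author_name": "Jane Smith", "birthplace": "Chicago"},
    {"book_id": 3, "title": "SQL Queries", "author_name": "John Doe", "birthplace": "Boston"},
]

# Book ID -> Author Name and Author Name -> Birthplace both hold ...
chain = (
    fd_holds(original, ("book_id",), ("author_name",))
    and fd_holds(original, ("author_name",), ("birthplace",))
)
# ... and Author Name repeats across rows, so it is not a key of this table.
author_name_is_key = len({r["author_name"] for r in original}) == len(original)
print(chain, author_name_is_key)  # True False
```

A non-key attribute (birthplace) that is determined by another non-key attribute (author_name) is exactly what 3NF rules out.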

When to Denormalize

In some cases, controlled denormalization – intentionally reducing normalization for performance gains – may be beneficial despite the risks.

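Denormalization can speed up reads because queries need fewer joins. Keeping related data together can also simplify parallel processing and replication. Fewer tables and foreign-key checks can make some inserts cheaper, but updates must then change every redundant copy of a value.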

However, denormalization leads to data repetition and all the drawbacks that normalization aims to solve. It increases anomaly risks and data integrity issues.

Denormalization requires careful analysis and should only be used sparingly when the performance gains outweigh increased maintenance and data integrity risks.
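The trade-off can be sketched with `sqlite3`. The example below builds a hypothetical read-optimized table, `BookReport`, from the normalized Books and Authors tables using `CREATE TABLE ... AS SELECT`. Reads against it need no join. A single change to an author’s birthplace, however, must now be applied both to the source table and to every copied row. The table name and the new birthplace are illustrative assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE Authors (AuthorID INTEGER PRIMARY KEY, Name TEXT, Birthplace TEXT);
    CREATE TABLE Books (BookID INTEGER PRIMARY KEY, Title TEXT,
                        AuthorID INTEGER REFERENCES Authors(AuthorID));
    INSERT INTO Authors VALUES (1, 'John Doe', 'Boston'), (2, 'Jane Smith', 'Chicago');
    INSERT INTO Books VALUES (1, 'SQL Basics', 1), (2, 'DB Design', 2), (3, 'SQL Queries', 1);

    -- Hypothetical denormalized copy: author columns are duplicated per book.
    CREATE TABLE BookReport AS
        SELECT b.BookID, b.Title, a.AuthorID, a.Name AS AuthorName,
               a.Birthplace AS AuthorBirthplace
        FROM Books AS b JOIN Authors AS a ON a.AuthorID = b.AuthorID;
    """
)

# Join-free read from the denormalized table.
report_row = conn.execute(
    "SELECT Title, AuthorName FROM BookReport WHERE BookID = 3"
).fetchone()
print(report_row)  # ('SQL Queries', 'John Doe')

# The cost: one logical change touches the source row and every duplicated copy.
with conn:
    conn.execute("UPDATE Authors SET Birthplace = 'Denver' WHERE AuthorID = 1")
    copies_updated = conn.execute(
        "UPDATE BookReport SET AuthorBirthplace = 'Denver' WHERE AuthorID = 1"
    ).rowcount
print(copies_updated)  # 2
```

If the second `UPDATE` were forgotten, the report would silently disagree with Authors. That is the update anomaly that normalization exists to prevent.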

Figure: A visualization of our example through the normal forms.

Tools and Technologies

In the world of database management, various tools and technologies play a crucial role in facilitating the normalization process. Entity-Relationship (ER) modeling tools, for example, provide a visual way to design the structure of a database, allowing for a clear depiction of entities, attributes, and relationships. This graphical representation helps database designers understand the inherent structure of the data and identify opportunities for normalization.

Database Management Systems (DBMSs) also play a vital role in enforcing a normalized design once it exists. A DBMS does not check which normal form a schema is in. It does, however, enforce the constraints that encode the design: primary keys, unique constraints, foreign keys, NOT NULL, and CHECK constraints. These mechanisms reject changes that would break the declared structure, such as a book that references a nonexistent author.
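As a small illustration, the sketch below uses SQLite through Python’s `sqlite3` module. It declares the Books/Authors constraints and then attempts several inserts. SQLite raises `sqlite3.IntegrityError` for each violation. Note that foreign-key enforcement in SQLite must be switched on per connection with `PRAGMA foreign_keys = ON`. The helper `try_insert` is hypothetical, written only for this demo.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # off by default in SQLite
conn.executescript(
    """
    CREATE TABLE Authors (AuthorID INTEGER PRIMARY KEY, Name TEXT NOT NULL);
    CREATE TABLE Books (
        BookID   INTEGER PRIMARY KEY,
        Title    TEXT NOT NULL,
        AuthorID INTEGER NOT NULL REFERENCES Authors(AuthorID)
    );
    INSERT INTO Authors VALUES (1, 'John Doe');
    """
)


def try_insert(sql, params):
    """Run one statement and report whether the DBMS accepted it."""
    try:
        conn.execute(sql, params)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


results = [
    try_insert("INSERT INTO Books VALUES (?, ?, ?)", (1, "SQL Basics", 1)),
    try_insert("INSERT INTO Books VALUES (?, ?, ?)", (2, "Orphan Book", 99)),  # no author 99
    try_insert("INSERT INTO Books VALUES (?, ?, ?)", (3, None, 1)),  # missing title
    try_insert("INSERT INTO Authors VALUES (?, ?)", (1, "Someone Else")),  # duplicate key
]
for outcome in results:
    print(outcome)
```

The constraints protect the structure you declared. Deciding that Birthplace belongs in Authors rather than Books is still a design decision.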

Moreover, specialized tools and libraries can assist with parts of the normalization process, such as identifying functional dependencies or suggesting how to decompose a table into smaller ones. By leveraging these technologies, database designers can create and maintain well-structured, normalized databases more efficiently.
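A classic building block for reasoning about functional dependencies is the attribute-closure algorithm. Given a set of attributes, it finds every attribute those attributes determine, which in turn reveals keys and problem dependencies. The sketch below is a minimal Python version, and the dependencies are stated by hand for the book/author example. It is not taken from any particular tool; the helper names `closure` and `fd` are hypothetical.

```python
def closure(attrs, fds):
    """Return every attribute functionally determined by `attrs` under `fds`.

    `fds` is a list of (lhs, rhs) pairs of frozensets, read as lhs -> rhs.
    """
    result = set(attrs)
    changed = True
    while changed:  # repeat until no dependency adds anything new
        changed = False
        for lhs, rhs in fds:
            if lhs <= result and not rhs <= result:
                result |= rhs
                changed = True
    return result


def fd(lhs, rhs):
    """Build a dependency from space-separated attribute names."""
    return (frozenset(lhs.split()), frozenset(rhs.split()))


# Dependencies of the original book/author table, stated as design facts.
fds = [
    fd("BookID", "Title AuthorName"),
    fd("AuthorName", "AuthorBirthplace"),
]
all_attrs = {"BookID", "Title", "AuthorName", "AuthorBirthplace"}

print(sorted(closure({"BookID"}, fds)))
# ['AuthorBirthplace', 'AuthorName', 'BookID', 'Title']
print(closure({"BookID"}, fds) == all_attrs)      # True: BookID is a key
print(closure({"AuthorName"}, fds) == all_attrs)  # False: AuthorName is not a key
```

Because AuthorName determines AuthorBirthplace without being a key, the closure points straight at the dependency that the Books/Authors split removes.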

Used well, these tools and technologies streamline the normalization process and help ensure that the resulting design is robust, maintainable, and aligned with best practices.

Challenges and Common Mistakes

Database normalization is a nuanced and often complex process that requires careful planning and execution. One common challenge faced by database designers is over-normalization. While normalization aims to eliminate redundancy and increase integrity, taking it to an extreme can result in excessive complexity. Over-normalized databases can lead to a large number of tables with intricate relationships, making queries and maintenance more challenging.

Under-normalization, on the other hand, can lead to redundancy and inconsistency. If normalization principles are not adequately applied, the resulting design may contain duplicated data, leading to potential inconsistencies and increased storage requirements. Striking the right balance between over- and under-normalization is a delicate task that requires a deep understanding of the data and its intended use.

Another common mistake is neglecting to consider the specific needs of the application or system using the database. Normalization decisions should align with the anticipated query patterns and performance requirements. A design that is theoretically well-normalized but misaligned with the actual usage patterns can lead to suboptimal performance.

Additionally, the evolving nature of data and business requirements can introduce challenges in maintaining a normalized design over time. Continuous monitoring, periodic reviews, and adaptability are essential in ensuring that the database structure remains effective and aligned with current needs.

Awareness of these common challenges and mistakes, coupled with thoughtful planning and ongoing attention to the unique characteristics of the data and system, can guide a more effective and successful normalization process.

Impact on Modern Databases

The advent of modern database systems, including NoSQL databases, has brought new dimensions to the concept of normalization. While traditional relational databases rely heavily on normalization principles, NoSQL databases often approach data organization differently.

In NoSQL databases, the emphasis may be more on horizontal scalability and flexible schema design. Normalization principles that apply strictly in a relational context may not be directly transferable to NoSQL environments. For example, denormalization might be more commonly used in NoSQL databases to optimize read performance, even at the cost of some redundancy.
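The sketch below illustrates this trade-off in plain Python, with no database library. It uses a hypothetical document layout, loosely modelled on a document store, in which each book embeds a copy of its author. A single document answers a read without any join. Changing the author, though, means finding and updating every embedded copy. The field names and the helper `update_author_birthplace` are illustrative assumptions.

```python
import copy

# Hypothetical document layout: each book document embeds its own copy of the author.
author = {"name": "John Doe", "birthplace": "Boston"}
books = [
    {"book_id": 1, "title": "SQL Basics", "author": copy.deepcopy(author)},
    {"book_id": 3, "title": "SQL Queries", "author": copy.deepcopy(author)},
]

# Reads are self-contained: one document holds the book and its author details.
print(books[0]["author"]["birthplace"])  # Boston


def update_author_birthplace(docs, author_name, new_place):
    """Update every embedded copy of an author; return how many documents changed."""
    changed = 0
    for doc in docs:
        if doc["author"]["name"] == author_name:
            doc["author"]["birthplace"] = new_place
            changed += 1
    return changed


updated = update_author_birthplace(books, "John Doe", "Denver")
print(updated)  # 2
```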

Furthermore, the diverse types of NoSQL databases, such as document-based, column-family, or graph databases, introduce different considerations for data organization. The principles of normalization may be applied differently or adapted to suit the specific characteristics of these database models.

Understanding the impact of modern database systems on normalization requires a broader perspective that considers the varying needs and characteristics of different data storage paradigms. It involves recognizing that the principles of normalization are not one-size-fits-all but must be interpreted and applied in the context of the specific database technology and the unique requirements of the application.

The interplay between traditional normalization principles and the evolving landscape of modern database technologies offers a rich and complex field of study. It reflects the ongoing evolution of data management practices and the need for database professionals to adapt and innovate in the face of changing technologies and demands.

Conclusion

Database normalization remains a vital strategy in contemporary database management. You may be a seasoned database administrator, a software engineer, or someone just starting to explore databases. In any of these roles, knowing when to normalize, and when to denormalize deliberately, leads to data that is consistent, efficient to store, and easier to maintain.